When things go wrong, don't panic. Learn!

On incidents and operational responsibility with a Platform Product.

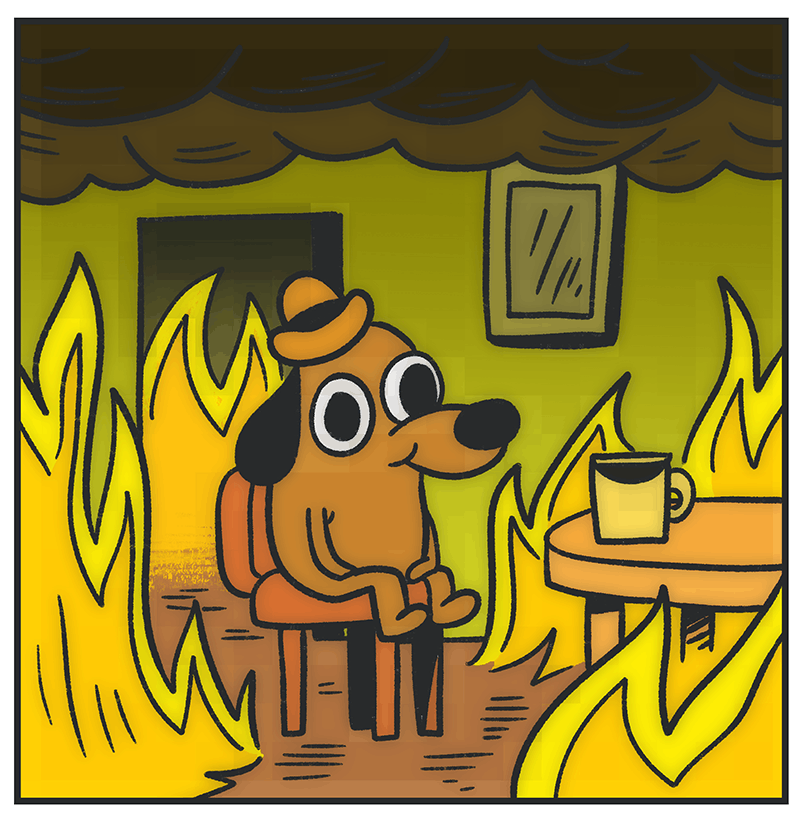

It’s 2am on a quiet Sunday night. The product managers are sleeping all snug in their beds, but somewhere, an engineer springs to life to PagerDuty’s obnoxious singing telling her that “The Server’s on Fiiiiiireeee”. Uh oh, latencies on the platform have skyrocketed and an increasing percentage of requests are failing. Performance has degraded to the point that the platform’s internal users are being affected, in some cases causing real end-user problems upstream. We have an incident. The on-call engineer rubs her eyes, puts on a pot of coffee and pulls out her computer.

In these situations the on-call engineer has two main responsibilities. First, she communicates to users and stakeholders that something is wrong. She creates an incident ticket and writes that 5% of requests on the platform are failing and that the 95th percentile latency is exceeding 500ms. She mentions that the investigation is ongoing and promises to provide updates with more information. She then sends emails to a distribution list of stakeholders, posts the incident ticket in the relevant Slack channels, rolls up her sleeves and begins the investigation.

She logs into one of the servers.

`cms-mbp:~ htop`

The server slowly responds but CPU and RAM usage look fine.

`cms-mbp:~ df -h`

Oh snap, the disk is full. Let’s check the logs.

`cms-mbp:~ cd /var/log`

`cms-mbp:/var/log ls -alh`

Oof, some log files are several gigabytes. In total it looks to be about 475GB of log files. Let’s tail the open log file and see what’s happening.

`cms-mbp:/var/log tail -f myservice.log`

`June 30 02:23:14:01 cms-mbp com.foo.bar [INFO]: Blahblahblah`
`June 30 02:23:14:01 cms-mbp com.foo.bar [INFO]: Blahblahblah`
`June 30 02:23:14:01 cms-mbp com.foo.bar [INFO]: Blahblahblah`
`June 30 02:23:14:01 cms-mbp com.foo.bar [INFO]: Blahblahblah`
`June 30 02:23:14:01 cms-mbp com.foo.bar [INFO]: Blahblahblah`
`June 30 02:23:14:01 cms-mbp com.foo.bar [INFO]: Blahblahblah`
`June 30 02:23:14:01 cms-mbp com.foo.bar [INFO]: Blahblahblah`
`June 30 02:23:14:01 cms-mbp com.foo.bar [INFO]: Blahblahblah`

Hundreds of identical log lines every second. OK, that looks suspicious. She wonders, “Is this normal behaviour? Is this something we introduced recently?”

`cms-mbp:/var/log zgrep -c Blahblahblah *`

OK, this particular log line didn’t show up at all until Wednesday, and then it appeared over 100,000 times each hour after that. This appears to be the cause of the big increase in file size. “What did we do on Wednesday?” she wonders. Looking through the git commit history, she finds Wednesday’s only commit. The code looks fine at first glance, but it contains the suspicious log line, and it’s pretty clear this is the code that is filling up the disks.

She reverts the commit, deploys, and cleans up some old log files to make space on the full machines. She watches the logs and graphs for another 15 minutes. Things look normal and eventually the PagerDuty alert resolves. Phew.

This is the second responsibility of the on-call engineer: putting out the fire. Notice that she doesn’t try to fix the root cause in the middle of the night! During an incident the priority is to restore your platform as soon as possible. Generally this will mean reverting some code, not making a bug fix.

Now that the fire is out and the crisis is averted, there is one last thing before bed. She needs to wrap up the communication. She writes some details about the nature of the incident, declares it all clear, and goes back to sleep.
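For the curious, the per-hour count from the investigation could be sketched in Python roughly like this. It is only an illustration: the function names are made up for this post, and it assumes every log line starts with a month, a day and a clock time, like the sample log lines in the story.

```python
import gzip
from collections import Counter
from pathlib import Path
from typing import Iterable, Iterator


def read_log_lines(path: Path) -> Iterator[str]:
    """Yield lines from a plain or gzip-compressed (rotated) log file."""
    opener = gzip.open if path.suffix == ".gz" else open
    with opener(path, "rt", errors="replace") as handle:
        yield from handle


def count_per_hour(lines: Iterable[str], needle: str) -> Counter:
    """Count lines containing `needle`, bucketed by 'Month Day Hour:00'.

    Assumes lines look like 'June 30 02:23:14:01 host logger [LEVEL]: msg'.
    Lines with fewer than three fields are skipped.
    """
    buckets: Counter = Counter()
    for line in lines:
        if needle not in line:
            continue
        parts = line.split()
        if len(parts) < 3:
            continue
        month, day, clock = parts[:3]
        hour = clock.split(":")[0]
        buckets[f"{month} {day} {hour}:00"] += 1
    return buckets
```

Calling `count_per_hour(read_log_lines(Path("/var/log/myservice.log")), "Blahblahblah")` would give one bucket per hour, which makes a sudden jump like Wednesday's easy to spot.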

We were lucky in this situation because we had proper monitoring set up to tell us how our platform was performing. We know our platform usually responds to requests in 30ms, and in this case responses took over 500ms for an extended period of time. This measurement of latency is an example of a Service Level Indicator, or an SLI. An SLI represents what performance the customers of our platform are experiencing at a given time.
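To make the idea of an SLI concrete, here is a minimal Python sketch of how the two numbers from the incident ticket (95th percentile latency and the share of failing requests) could be computed from a window of request samples. The `Request` type and the function names are assumptions for illustration, and the nearest-rank percentile is just one of several common definitions; a real monitoring system will do this for you.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Request:
    """One observed request (hypothetical shape, for illustration only)."""
    latency_ms: float
    ok: bool


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def p95_latency_ms(requests: Sequence[Request]) -> float:
    """Latency SLI: 95th percentile latency over the window."""
    return percentile([r.latency_ms for r in requests], 95)


def error_rate(requests: Sequence[Request]) -> float:
    """Availability SLI: fraction of requests in the window that failed."""
    if not requests:
        raise ValueError("need at least one sample")
    return sum(1 for r in requests if not r.ok) / len(requests)
```

Both functions describe what customers experienced over a window of time, which is exactly what an SLI is meant to capture.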

We may also have established explicit expectations with our customers for what level of service they will receive. These are Service Level Objectives, or SLOs. SLOs are, by definition, more relaxed than what the SLI reports under normal circumstances. Things do go wrong sometimes. Some latency spikes are ‘normal’ and transient. Some are not normal and require an on-call engineer to wake up and intervene. We can’t promise that our platform will perform ‘as normal’ all the time, but we can promise to have on-call engineers and processes in place to keep the quality of service at a level that is reasonable for our customers. This implies a necessary delta between an SLI and an SLO, which gives the platform team some wiggle room to fix incidents before they have customer impact. When the quality of service your platform provides to your customers becomes worse than the SLO you promised, you have an incident.
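As a rough sketch of that relationship, the check below compares measured SLI values against an SLO and reports which promises are broken. The thresholds (a 200ms 95th percentile latency and a 1% error rate) are invented for the example and are not our actual SLO; the class and function names are also hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Slo:
    """Promised service level (threshold values below are made up)."""
    max_p95_latency_ms: float
    max_error_rate: float


def slo_breaches(slo: Slo, p95_latency_ms: float, error_rate: float) -> List[str]:
    """Return a human-readable description of every SLO the measured SLIs violate."""
    problems = []
    if p95_latency_ms > slo.max_p95_latency_ms:
        problems.append(
            f"p95 latency {p95_latency_ms:.0f}ms exceeds SLO of {slo.max_p95_latency_ms:.0f}ms"
        )
    if error_rate > slo.max_error_rate:
        problems.append(
            f"error rate {error_rate:.1%} exceeds SLO of {slo.max_error_rate:.1%}"
        )
    return problems


# Hypothetical SLO: comfortably looser than the ~30ms we see on a normal day.
EXAMPLE_SLO = Slo(max_p95_latency_ms=200, max_error_rate=0.01)
```

With these example thresholds, a normal day (30ms, no errors) returns an empty list, while the night in the story (over 500ms, 5% failures) breaches both. The gap between 30ms and 200ms is the wiggle room.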

But if your platform isn’t mature enough to have a properly established SLO, that doesn’t mean it is immune to incidents. If it has users, those users have expectations. If those expectations are breached in an impactful way, you have an incident. You might notice this from your monitoring and alerting, or you might notice it from customers complaining in Slack. Regardless, it's time to sound the alarm.

Once the fire is out, you have a golden opportunity to learn. Technically, incidents represent quite interesting and obscure engineering problems. Your team should discuss what happened and try to understand the root cause. But for a product manager, this is also a golden opportunity to understand the impact that our platform product is having on the rest of the company.

A platform or internal facing product is at least one step removed from the end users, so the impact that an incident will have differs from use case to use case. It’s easy for an internal user to assume that a platform they depend on will not have sustained outages and ignore the possibility. This is why incident communication is so important. Our users need to recognise the failure cases, and understand the impact the incident had on their products and users. As a platform product manager, I need to understand how the users are leveraging our platform to give business value. A platform product manager in a medium to large company is probably acutely aware of a small portion of usage which is the most important, but only vaguely aware of the rest. The impact assessment of a major incident can provide the best data and insights into the broad value a platform product provides.

So after an incident, we have both a technical assessment from the engineers and an impact assessment from Product. The problem may be solved but now the learnings must live on. It’s time to analyse what happened together and learn from each other.

Have a post-mortem together with your team and impacted stakeholders. Discuss the timeline. When did the incident actually begin? When did we notice? When did we mitigate it? Could we have prevented it if we had noticed earlier? Are there other mitigations we or our users should put into place to protect against this happening in the future?

Also discuss with your users the implicit or explicit (SLO) expectations they have for your platform product. They might be misaligned, which could mean changes on either side. Maybe it’s the right time to recognise those implicit expectations and make them explicit by setting an SLO. If the expectations that users have of your product are not feasible to uphold, then maybe your product is wrong for their use case.
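The timeline questions boil down to a couple of simple durations. Here is a small Python sketch that captures them; the class name is hypothetical, and any timestamps you feed it in an example are placeholders rather than data from a real incident.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class IncidentTimeline:
    """Key moments of an incident, as reconstructed in the post-mortem."""
    began: datetime      # when the problem was actually introduced
    detected: datetime   # when monitoring or a user alerted us
    mitigated: datetime  # when service was restored

    def __post_init__(self) -> None:
        if not (self.began <= self.detected <= self.mitigated):
            raise ValueError("expected began <= detected <= mitigated")

    @property
    def time_to_detect(self) -> timedelta:
        """How long the problem existed before anyone noticed."""
        return self.detected - self.began

    @property
    def time_to_mitigate(self) -> timedelta:
        """How long it took to put out the fire once we noticed."""
        return self.mitigated - self.detected
```

In the story above, the time to detect would be measured in days (the commit landed on Wednesday, the page came on Sunday night) while the time to mitigate was under an hour, which points the post-mortem towards earlier detection, for example a disk usage alert.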

So when things go wrong, it’s easy to focus on the negatives. And the negatives may be very impactful for your product and company (although this means you’ve built an impactful product). But in any sufficiently agile tech company the speed of innovation is well worth the risk of occasional problems. And the learnings you get from these occasional problems can give such powerful insights, both technically and for your platform product. The value you and your stakeholders get from the learnings might very well outweigh the impact of the outage. Never blame an engineer for a mistake, thank them for giving you all an opportunity to learn from it. Don’t fear incidents, celebrate them.

In last week’s Product Internals podcast, Arvid and I talk about incidents and the operational responsibility that a Platform Product Manager has. Please have a listen, and reach out to us to discuss or debate on Twitter @productinternal or at podcast@productinternals.com! And if you’re enjoying the show so far, please follow!

And if you liked this post we’d love to have you join the Product Internals community! Subscribe for a new post every Wednesday. If you find them as valuable as we find writing them please share them with your friends and colleagues!